
What Is DevOps?

In a traditional software development model, developers write large amounts of code for new features, products, bug fixes and similar work, and then pass their work to the operations team for deployment, usually through an automated ticketing system. The operations team picks up the request from its queue, tests the code and prepares it for production, a process that can take days, weeks or months. If the operations team runs into problems during deployment, it sends a ticket back to the developers describing what to fix. Only after this back-and-forth is resolved does the workload reach production.

This model makes software delivery a lengthy, fragmented process. Developers often see operations as a roadblock that slows down their project timelines, while operations teams feel like a dumping ground for development problems.

DevOps solves these problems by uniting development and operations teams throughout the entire software delivery process, enabling them to discover and remediate issues earlier, automate testing and deployment, and reduce time to market.

To better understand what DevOps is, let’s first understand what DevOps is not.

DevOps Is Not

- A combination of the Dev and Ops teams: There are still two teams; they just operate in a communicative, collaborative way.

- Its own separate team: There is no such thing as a “DevOps engineer.” Although some companies may appoint a DevOps team as a pilot when trying to transition to a DevOps culture, DevOps refers to a culture where developers, testers and operations personnel cooperate throughout the entire software delivery lifecycle.

- A tool or set of tools: Although there are tools that work well with a DevOps model or help promote DevOps culture, DevOps is ultimately a strategy, not a tool.

- Automation: While very important for a DevOps culture, automation alone does not define DevOps.

DevOps Defined

In a DevOps model, developers do not code huge feature sets and then blindly hand them over to operations for deployment. Instead, they frequently deliver small amounts of code for continuous testing. Rather than communicating issues and requests through a ticketing system, the development and operations teams meet regularly, share analytics and co-own projects end-to-end.

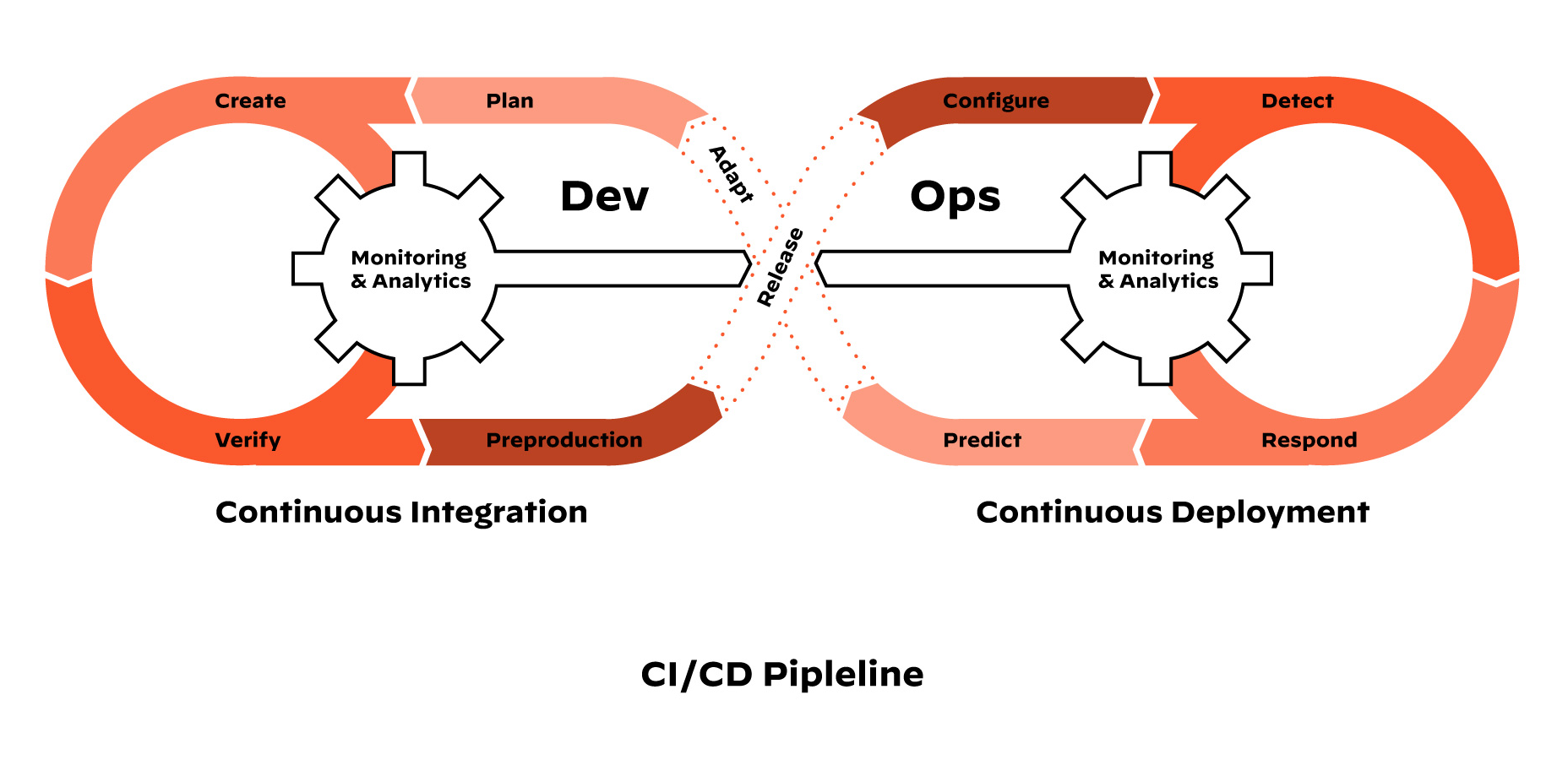

CI/CD Pipeline

DevOps is a cycle of continuous integration and continuous delivery (or continuous deployment), otherwise known as the CI/CD pipeline. The CI/CD pipeline integrates development and operations teams to improve productivity by automating infrastructure and workflows as well as continuously measuring application performance. It looks like this:

Figure 1: Stages and DevOps workflow of the CI/CD pipeline

- Continuous integration requires developers to integrate code into a repository several times per day for automated testing. Each check-in is verified by an automated build, allowing teams to detect problems early.

- Continuous delivery, not to be confused with continuous deployment, means that building and testing are automated and every change is kept in a releasable state, but a release must pass a manual check or approval before it goes to production.

- Continuous deployment takes continuous delivery one step further. Instead of a manual check, any change that passes automated testing is deployed to production automatically, giving customers access to new features as soon as they are ready.
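The difference between these practices comes down to what happens after the automated checks pass. The following Python sketch models that decision. `Stage`, `run_pipeline` and the `"delivery"`/`"deployment"` modes are hypothetical names chosen for illustration, not the API of any real CI/CD tool, and the checks are toy stand-ins for real build and test jobs.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Stage:
    """One automated pipeline stage (hypothetical): a name and a check that returns True on success."""
    name: str
    check: Callable[[str], bool]


def run_pipeline(commit: str, stages: List[Stage], mode: str,
                 approve: Callable[[str], bool] = lambda c: False) -> str:
    """Run every automated stage, then decide how the change reaches production.

    mode="delivery":   a passing change waits for a manual approval before release.
    mode="deployment": a passing change is released automatically.
    """
    if mode not in ("delivery", "deployment"):
        raise ValueError(f"unknown mode: {mode}")
    # Continuous integration: every check-in is built and tested automatically,
    # and the first failing stage stops the pipeline early.
    for stage in stages:
        if not stage.check(commit):
            return f"rejected at {stage.name}"
    if mode == "deployment":
        return "deployed"  # continuous deployment: no human in the loop
    # Continuous delivery: the change is releasable, but a person signs off.
    return "deployed" if approve(commit) else "awaiting approval"


if __name__ == "__main__":
    stages = [
        Stage("build", lambda c: True),
        Stage("unit-tests", lambda c: "bug" not in c),  # toy stand-in for a test suite
    ]
    print(run_pipeline("fix typo", stages, "delivery"))    # awaiting approval
    print(run_pipeline("fix typo", stages, "deployment"))  # deployed
    print(run_pipeline("add bug", stages, "deployment"))   # rejected at unit-tests
```

The same passing commit stops at `"awaiting approval"` under continuous delivery until the `approve` callback returns `True`, while under continuous deployment it goes straight to production. In both modes, a failing stage rejects the change before it can be released.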

DevOps and Security

One problem in DevOps is that security often falls through the cracks. Developers move quickly, and their workflows are automated. Security is often handled by a separate team, and developers don't want to slow down for security checks and requests. As a result, code is often deployed without going through the proper security channels, which makes it easy for harmful security mistakes to reach production.

To solve this, organizations are adopting DevSecOps. DevSecOps takes the concept behind DevOps, the idea that developers and IT teams should work closely together rather than separately throughout software delivery, and extends it to security by integrating automated security checks into the full CI/CD pipeline. Security then becomes part of the workflow instead of an outside force, so developers can maintain their speed without compromising data security.
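As a minimal sketch of one such automated check, the hypothetical stage below scans the added lines of a unified diff for apparent hardcoded credentials and fails the pipeline if it finds any. The function names and both regular expressions are illustrative assumptions: the first pattern targets the well-known `AKIA` prefix format of AWS access key IDs, and the second is a crude match for `password = "..."` assignments. Dedicated secret scanners use much larger, tuned rule sets, and secret scanning is only one of many checks a DevSecOps pipeline might run.

```python
import re
from typing import List, Tuple

# Illustrative rules only (hypothetical); real scanners ship far larger, tuned rule sets.
SECRET_PATTERNS = {
    "aws-access-key-id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded-password": re.compile(r"""password\s*=\s*["'][^"']+["']""", re.IGNORECASE),
}


def scan_added_lines(diff: str) -> List[Tuple[int, str]]:
    """Return (line number within the diff, rule name) for each added line that matches a rule."""
    findings = []
    for lineno, line in enumerate(diff.splitlines(), start=1):
        # In unified diff format, added lines start with "+"; skip the "+++" file header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings


def security_gate(diff: str) -> bool:
    """Pipeline stage: pass only when the change introduces no apparent secrets."""
    return not scan_added_lines(diff)


if __name__ == "__main__":
    diff = "+++ b/app.py\n+db_password = 'hunter2'\n+retries = 3\n"
    print(scan_added_lines(diff))  # [(2, 'hardcoded-password')]
    print(security_gate(diff))     # False
```

Because the check runs automatically on every change, developers get feedback in the same pipeline run instead of waiting on a separate security review.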